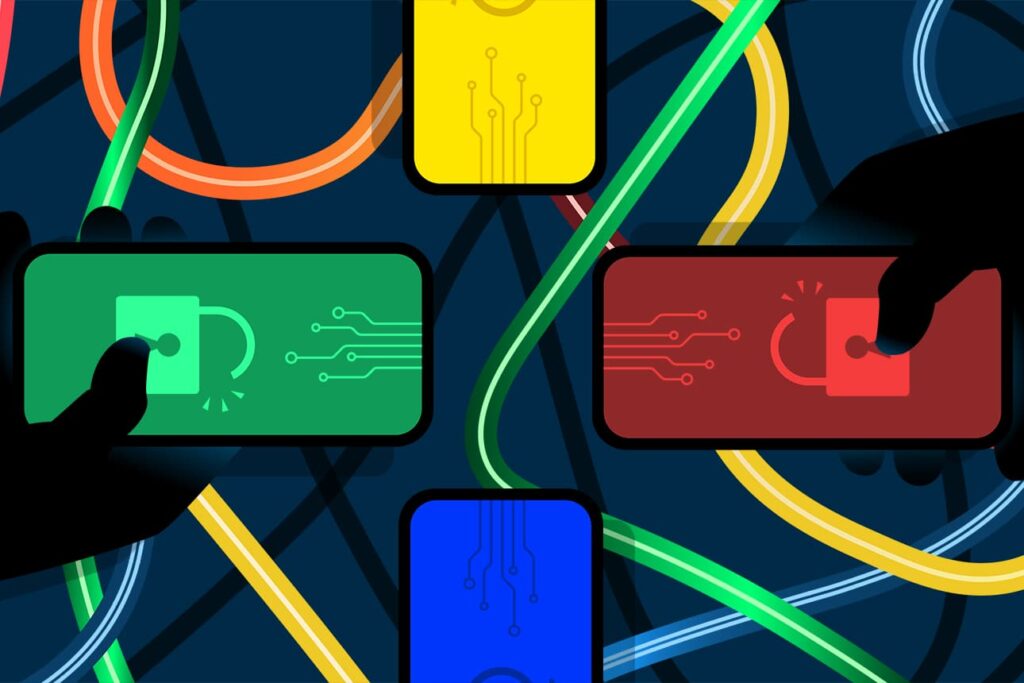

The Cyberspace Administration of China (CAC) has unveiled a comprehensive set of draft regulations aimed at curbing the potential psychological risks posed by increasingly sophisticated artificial intelligence. This move represents a significant pivot in the governance of large language models (LLMs), shifting the focus from mere factual accuracy and political alignment to the nuanced realm of "emotional safety." By targeting "human-like interactive AI services," Beijing is positioning itself as the first global power to formally regulate the anthropomorphic characteristics of digital entities, specifically those designed to simulate human personality and forge emotional bonds with users.

The proposed guidelines, released for public comment through late January, come at a critical juncture for the Chinese tech sector. As domestic firms race to monetize "AI companions" and virtual celebrities, the government is signaling that the era of unfettered emotional experimentation is coming to an end. The measures are designed to prevent AI from exerting undue influence over human emotions, with a particular emphasis on preventing outcomes related to self-harm, suicide, and illegal activities such as gambling. This regulatory expansion suggests that as AI becomes harder to distinguish from human interaction, the legal frameworks governing it must evolve from data protection to psychological safeguarding.

Under the new framework, any AI product or service offered to the Chinese public that uses text, images, audio, or video to simulate a human persona will come under strict scrutiny. The draft rules explicitly prohibit AI from inducing users into behaviors that could lead to physical or mental harm. Experts in the field describe this as a "leap from content safety to emotional safety." While previous regulations focused on preventing the generation of "fake news" or politically sensitive content, these new rules acknowledge that the danger of AI lies not just in what it says, but in how it makes a person feel. The psychological dependency formed through long-term interaction with a digital "girlfriend" or "mentor" creates a vulnerability that regulators are now moving to address.

The economic implications of these rules are immediate and profound, particularly for the burgeoning ecosystem of Chinese AI startups. The announcement arrived just as two of the country’s most prominent AI unicorns, Minimax and Z.ai (which filed under its corporate name, Knowledge Atlas Technology), applied for initial public offerings (IPOs) on the Hong Kong Stock Exchange. Minimax, which has seen explosive growth with its "Talkie" app, reported an average of over 20 million monthly active users in the first three quarters of the year. The app allows users to engage in deep, often emotionally charged conversations with virtual characters. Z.ai, for its part, has said that its technology powers approximately 80 million devices, ranging from smartphones to smart vehicles.

For these companies, the draft rules introduce a layer of operational complexity that could weigh on their valuations. The regulations mandate that any AI service with more than 1 million registered users or more than 100,000 monthly active users must undergo a rigorous security assessment. The CAC is also proposing a "two-hour rule," requiring service providers to issue reminders to users after two hours of continuous interaction. This mirrors China’s earlier crackdown on the gaming industry, where time limits were imposed to combat digital addiction among young people. By approaching AI companionship with the same caution as online gaming, Beijing is effectively classifying "emotional engagement" as a potentially addictive product that warrants regulation.
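To make the two quantitative triggers concrete, here is a minimal Python sketch of how a provider might check them. It is an illustration under stated assumptions, not a method specified by the CAC: the draft as reported gives only the user thresholds and the two-hour figure, so the function and class names, the strict "more than" comparison, and the 30-minute idle gap that ends a "continuous" session are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Thresholds as reported for the CAC draft; treating them as strict
# "more than" comparisons is an assumption of this sketch.
REGISTERED_USER_THRESHOLD = 1_000_000
MONTHLY_ACTIVE_USER_THRESHOLD = 100_000
REMINDER_INTERVAL = timedelta(hours=2)
# Hypothetical: a pause this long ends a "continuous" session.
IDLE_RESET = timedelta(minutes=30)


def needs_security_assessment(registered_users: int, monthly_active_users: int) -> bool:
    """Either threshold on its own triggers the assessment (the rule is an 'or')."""
    return (registered_users > REGISTERED_USER_THRESHOLD
            or monthly_active_users > MONTHLY_ACTIVE_USER_THRESHOLD)


@dataclass
class Session:
    """Tracks one user's continuous interaction (hypothetical structure)."""
    started_at: datetime
    last_activity_at: datetime
    last_reminder_at: Optional[datetime] = None

    def on_message(self, now: datetime) -> bool:
        """Register a user message; return True if a reminder should be shown now."""
        if now - self.last_activity_at >= IDLE_RESET:
            # Long pause: treat this message as the start of a new continuous session.
            self.started_at = now
            self.last_reminder_at = None
        self.last_activity_at = now
        # Count two hours from the session start, or from the most recent reminder.
        reference = self.last_reminder_at or self.started_at
        if now - reference >= REMINDER_INTERVAL:
            self.last_reminder_at = now
            return True
        return False


if __name__ == "__main__":
    print(needs_security_assessment(1_200_000, 40_000))  # True: registered users exceed 1M
    print(needs_security_assessment(500_000, 80_000))    # False: neither threshold crossed

    t0 = datetime(2025, 1, 1, 20, 0)
    session = Session(started_at=t0, last_activity_at=t0)
    for minutes in range(10, 301, 10):  # one message every ten minutes for five hours
        now = t0 + timedelta(minutes=minutes)
        if session.on_message(now):
            print("reminder at", now.strftime("%H:%M"))
```

With a message every ten minutes starting at 20:00, the sketch prompts the user at 22:00 and again at 00:00. How the final rules define "continuous" interaction would determine where an idle cutoff like `IDLE_RESET` belongs, or whether it belongs at all.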

The rise of the "loneliness economy" in China has fueled the rapid adoption of these AI companions. In a society characterized by high-pressure work environments and shifting social structures, millions of young people have turned to AI for companionship. Tech giants such as Baidu have leaned into this trend with products like Wantalk, which offers virtual personas tailored to individual user preferences. However, the psychological risks are becoming harder to ignore. Reports from around the world of users developing unhealthy attachments to AI, and in some tragic cases being encouraged by chatbots to harm themselves, have created a sense of urgency for regulators.

From a global perspective, China’s proactive stance contrasts sharply with the regulatory environments in the United States and the European Union. The EU’s AI Act takes a risk-based approach centered on transparency and data bias; although it bans manipulative techniques that cause significant harm and requires that people be told when they are interacting with an AI system, it does not single out anthropomorphic companion services or the emotional bonds they are built to cultivate. The United States, meanwhile, has relied at the federal level largely on voluntary commitments from tech companies and existing consumer protection laws. China’s move to codify standards for "human-like" interaction could set a precedent for how other nations handle the blurring line between biological and synthetic social interaction.

Market analysts suggest that while these regulations may seem restrictive, they could provide a much-needed "safety floor" for the industry. By establishing clear boundaries, the government may be attempting to prevent a major social backlash that could lead to even more draconian bans in the future. The draft rules do not solely focus on prohibitions; they also encourage the development of AI for "cultural dissemination and elderly companionship." This suggests a dual-track strategy: curbing the risks of the "loneliness economy" while harnessing the technology to address the challenges of China’s rapidly aging population.

Technically, the implementation of these rules will require significant advances in AI auditing. Detecting whether a chatbot is subtly "influencing" a user toward gambling or self-harm is far more difficult than filtering for specific keywords. It requires a sophisticated understanding of context, sentiment, and the long-term trajectory of a conversation. Companies will likely need to invest heavily in "safety-by-design" architectures, utilizing Reinforcement Learning from Human Feedback (RLHF) to bake ethical and emotional guardrails directly into their models. This added R&D cost could favor established players with deep pockets over smaller startups, potentially leading to further consolidation in the Chinese AI market.
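The gap between keyword filtering and contextual monitoring can be illustrated with a toy Python sketch. The blocklist, window size, and threshold below are hypothetical, and the per-turn risk scores are assumed to come from some context-aware classifier that is not shown here. The point is only that a message containing no flagged keyword can still belong to a conversation whose overall trajectory warrants intervention.

```python
from collections import deque

# Hypothetical blocklist; real deployments would be far larger and language-specific.
BLOCKED_KEYWORDS = {"suicide", "kill myself", "bet everything"}


def keyword_flag(message: str) -> bool:
    """Naive filter: flags a message only if it contains a listed phrase."""
    text = message.lower()
    return any(keyword in text for keyword in BLOCKED_KEYWORDS)


class TrajectoryMonitor:
    """Flags a conversation when recent per-turn risk stays high on average.

    Each risk score in [0, 1] is assumed to come from a context-aware
    classifier (hypothetical; not implemented in this sketch).
    """

    def __init__(self, window: int = 5, threshold: float = 0.6) -> None:
        self.scores: deque = deque(maxlen=window)  # keeps only the last `window` turns
        self.threshold = threshold

    def update(self, risk_score: float) -> bool:
        self.scores.append(risk_score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough history yet to judge a trend
        return sum(self.scores) / len(self.scores) >= self.threshold


if __name__ == "__main__":
    print(keyword_flag("Maybe everyone would be better off without me"))  # False

    monitor = TrajectoryMonitor(window=5, threshold=0.6)
    for turn, score in enumerate([0.2, 0.4, 0.5, 0.7, 0.8, 0.9], start=1):
        print(turn, monitor.update(score))  # only turn 6 prints True
```

Here the indirect message passes the keyword check, while a sustained run of elevated risk scores trips the monitor on the sixth turn. A production system would need far richer signals than a moving average over a fixed window, which is where the R&D costs described above come in.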

The geopolitical dimension of these rules cannot be overlooked. As the US and China compete for AI supremacy, the contest is being fought not only over computing power and chip dominance but also over global standards. If China successfully implements a working model for "emotional AI" regulation, it could export these standards to other jurisdictions, particularly markets that look to Beijing’s tech governance as a blueprint. This "regulatory diplomacy" is a key component of China’s broader ambition to become the world’s leading AI innovator by 2030.

As the public comment period runs toward the January 25 deadline, the tech community will be watching closely for any modifications to the "two-hour" limit and the specific criteria for security assessments. For investors, the focus remains on the Hong Kong IPOs of Minimax and Z.ai. If these companies can demonstrate a clear path to compliance without sacrificing user engagement, it could validate the commercial viability of "regulated" emotional AI. However, if the compliance burden proves too high, it may dampen enthusiasm for what was once considered one of the most promising sectors of the AI revolution.

Ultimately, the CAC’s draft rules represent a recognition that AI is no longer just a tool for productivity; it is a social actor. By stepping in to regulate the "personality" of machines, China is embarking on a massive social experiment to see if the state can successfully mediate the relationship between humans and their digital creations. In the high-stakes race for technological dominance, the winner may not be the one with the smartest AI, but the one who can best manage the profound psychological impact these machines have on the human soul.