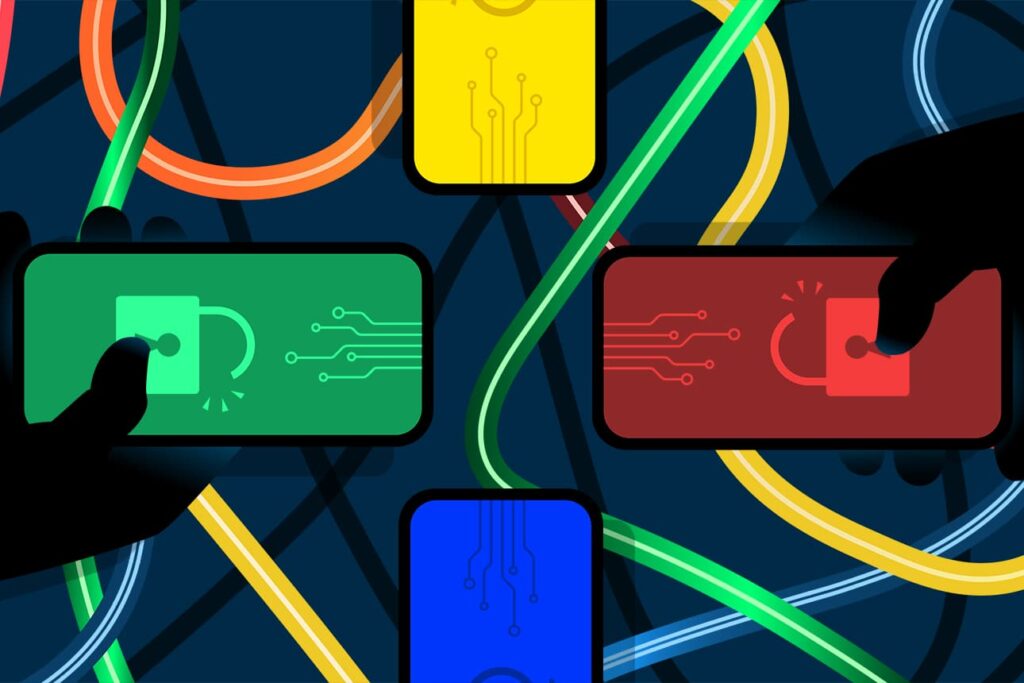

The rapid ascent of generative artificial intelligence (AI) in software development has set off a wave of change across the global tech landscape, promising unprecedented boosts in developer productivity and project velocity. From automating boilerplate code generation to offering intelligent suggestions for complex algorithms, AI-powered coding tools are quickly becoming indispensable assets for engineering teams worldwide. Industry analysts project that the integration of AI could raise developer efficiency by as much as 30 to 50% in certain tasks, translating into shorter development cycles and faster time-to-market for new products and services. Venture capital firms are pouring billions into AI development platforms, fueling an arms race among tech giants to deliver the most sophisticated coding assistants. However, amid this fervent adoption and the undeniable immediate gains, a subtle yet profound paradox emerges: the very tools designed to accelerate progress can also cause codebases to accumulate substantial technical debt, which threatens to cripple systems and stifle innovation months or even years down the line.

The allure of AI-driven productivity is undeniable. Developers can leverage large language models (LLMs) to generate entire code blocks, identify and fix bugs, refactor existing code, and even translate code between different programming languages. This automation frees up valuable human capital, allowing engineers to focus on higher-level architectural challenges, creative problem-solving, and strategic innovation. Global tech leaders, from established enterprises to agile startups, are increasingly mandating the use of these tools, driven by competitive pressures to accelerate their digital transformation initiatives. A recent survey indicated that over 70% of software development organizations are either actively experimenting with or have already integrated generative AI into their workflows, with many reporting initial surges in code output. This immediate gratification, however, often overshadows a critical long-term concern: the quality and future maintainability of the AI-generated code, particularly when integrated into complex, existing systems.

Technical debt, in its essence, represents the future rework required to address shortcuts or suboptimal decisions made during current software development. It’s akin to taking out a loan: you get the immediate benefit, but accrue interest over time. This debt manifests in various forms: poor code quality, inadequate documentation, architectural inconsistencies, and design flaws. While human developers are certainly capable of introducing technical debt, generative AI presents a unique set of challenges that can accelerate its accumulation at an alarming rate, especially in "brownfield" environments—systems built on legacy codebases with complex interdependencies, undocumented quirks, and deeply entrenched architectural patterns.

One primary concern stems from the AI’s limited contextual understanding. While an LLM can generate syntactically correct code, it may lack the nuanced understanding of a specific system’s architecture, business logic, or historical constraints. This often results in code that is functional but inefficient, overly complex, or misaligned with established design principles. Such code can introduce subtle bugs, performance bottlenecks, and security vulnerabilities that are difficult for human developers to detect during routine code reviews, precisely because they appear superficially correct. Moreover, the sheer volume of code that AI can produce exacerbates the problem. A larger codebase, even if partially AI-generated, inherently increases complexity, making it harder to navigate, debug, and update, leading to higher maintenance costs and slower future development.
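
As a hypothetical illustration of code that is "functional but inefficient", consider the two Python functions below. Neither is taken from any real tool's output; the function names and the task (finding duplicate values) are assumptions chosen for the example. The first version reads cleanly and passes a small test, which is why a reviewer might approve it, yet it rescans the list on every iteration and slows sharply as the input grows. The second produces the same set of duplicates in a single pass.

```python
from typing import Hashable, Iterable, List


def find_duplicates_quadratic(items: List[Hashable]) -> List[Hashable]:
    """Correct on small inputs, but O(n^2): each `in` test rescans a list."""
    duplicates: List[Hashable] = []
    for i, item in enumerate(items):
        # items[i + 1:] copies the tail of the list on every iteration,
        # and both membership tests are linear scans.
        if item in items[i + 1:] and item not in duplicates:
            duplicates.append(item)
    return duplicates


def find_duplicates_linear(items: Iterable[Hashable]) -> List[Hashable]:
    """Same set of duplicates in one pass, using sets for O(1) lookups."""
    seen = set()
    reported = set()
    duplicates: List[Hashable] = []
    for item in items:
        if item in seen and item not in reported:
            reported.add(item)
            duplicates.append(item)
        seen.add(item)
    return duplicates
```

Both functions return the same values, though the order of the results can differ. On a list of a few dozen elements the difference is invisible, which is the point: the cost only appears at production scale, long after the review has been signed off.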

The "black box" nature of AI code generation also contributes to this problem. Developers may struggle to fully comprehend the rationale behind an AI’s suggestions or generated segments, leading to a reduced sense of ownership and accountability. When bugs inevitably emerge in these AI-assisted sections, diagnosing and resolving them can be significantly more time-consuming and costly, as engineers must decipher not only the code but also the underlying (and often opaque) reasoning that produced it. This can lead to what some experts term "cognitive debt," where the burden of understanding and verifying AI-generated output outweighs the initial productivity gains. The implications extend to developer skill degradation: an over-reliance on AI for core coding tasks could diminish human developers’ critical thinking, problem-solving abilities, and deep understanding of foundational programming concepts, leaving them ill-equipped to tackle complex, novel challenges or manage intricate legacy systems.

The economic implications of unchecked technical debt are substantial and far-reaching. For businesses, escalating technical debt translates directly into increased operational costs. Resources that should be dedicated to innovation and new feature development are instead diverted to debugging, refactoring, and maintaining brittle systems. This can lead to slower product cycles, delayed market entry for new offerings, and a diminished competitive edge. A study by McKinsey & Company estimated that poor code quality and technical debt cost the global economy trillions of dollars annually in lost productivity and increased IT expenditure. Companies burdened by high technical debt also face challenges in talent retention; developers are often demotivated by working on antiquated, difficult-to-maintain codebases, leading to higher attrition rates and increased recruitment costs. Furthermore, in highly regulated industries like finance and healthcare, poor code quality can lead to compliance failures, data breaches, and severe reputational damage, compounding financial losses with legal and regulatory penalties.

Navigating this intricate landscape requires a strategic, disciplined approach that maximizes AI’s benefits while rigorously mitigating its inherent risks. As highlighted by experts such as Dartmouth College professor Geoffrey Parker and Culture Amp co-founder and CTO Doug English, companies must establish clear directives and robust guardrails for engineers utilizing AI coding tools. This begins with acknowledging AI as an assistant, not a replacement, for human intellect and judgment. The "human-in-the-loop" principle is paramount, ensuring that every piece of AI-generated code undergoes stringent human review, verification, and validation.

Key strategies for effective AI integration include:

- Rigorous Code Review Processes: Implementing enhanced code review protocols specifically designed to scrutinize AI-generated output for efficiency, adherence to architectural patterns, security vulnerabilities, and contextual relevance.

- Strategic Application: Prioritizing the use of generative AI for greenfield projects or well-defined, isolated tasks where the risk of introducing systemic debt is lower. In brownfield environments, AI should be used with extreme caution, perhaps limited to refactoring small, self-contained modules or generating unit tests rather than core business logic.

- Developer Education and Training: Equipping engineers with the skills to effectively prompt AI tools, critically evaluate their output, and understand the potential pitfalls. This includes training on advanced testing methodologies and static code analysis tools that can detect common AI-induced errors.

- Automated Quality Gates: Implementing continuous integration/continuous deployment (CI/CD) pipelines with automated quality gates that include comprehensive testing, static analysis, and security scanning tools to catch issues early in the development cycle.

- Architectural Governance: Establishing strong architectural governance frameworks that ensure all AI-generated components align with the overall system architecture, design principles, and coding standards. This helps maintain consistency and reduces fragmentation.

- Observability and Monitoring: Deploying robust observability and monitoring solutions to track the performance, stability, and maintainability of AI-assisted code in production environments, allowing for early detection of issues.

- Clear Ownership and Accountability: Defining clear lines of responsibility for AI-generated code, ensuring that human developers remain ultimately accountable for the quality and integrity of the software.
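
To make the "automated quality gate" and "human-in-the-loop" ideas more concrete, here is a minimal Python sketch of a gate that a CI pipeline could run before merging a change. It is an illustration under stated assumptions, not a reference implementation: the class names, metric fields, and threshold values (80% coverage, complexity of 10, zero high-severity findings) are all hypothetical, and a real pipeline would populate the metrics from its own test, static analysis, and security scanning tools.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class GateThresholds:
    """Hypothetical limits; each team would choose its own values."""
    min_coverage_percent: float = 80.0
    max_function_complexity: int = 10
    max_high_severity_findings: int = 0


@dataclass(frozen=True)
class ChangeMetrics:
    """Metrics for one proposed change, gathered by other pipeline steps."""
    coverage_percent: float
    max_function_complexity: int
    high_severity_findings: int
    human_reviewed: bool  # set only after a named engineer approves


def evaluate_gate(
    metrics: ChangeMetrics,
    thresholds: GateThresholds = GateThresholds(),
) -> List[str]:
    """Return a list of failure reasons; an empty list means the gate passes."""
    failures: List[str] = []
    if metrics.coverage_percent < thresholds.min_coverage_percent:
        failures.append("test coverage below minimum")
    if metrics.max_function_complexity > thresholds.max_function_complexity:
        failures.append("function complexity above limit")
    if metrics.high_severity_findings > thresholds.max_high_severity_findings:
        failures.append("unresolved high-severity security findings")
    if not metrics.human_reviewed:
        # Human-in-the-loop: no change merges on automated checks alone.
        failures.append("missing human review")
    return failures
```

A pipeline step could call `evaluate_gate` and block the merge whenever the returned list is non-empty. Returning every failure reason, rather than stopping at the first, gives the accountable engineer the full picture in a single run.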

Ultimately, generative AI offers an unprecedented opportunity to redefine software development, unlocking new levels of creativity and efficiency. However, realizing its full potential demands more than simply adopting the latest tools; it requires a shift in organizational culture towards prioritizing long-term code health over short-term velocity. By understanding and proactively managing the risks of technical debt, particularly in AI-assisted development, businesses can pursue digital innovation without laying the groundwork for future systemic fragility, and can build a more robust and sustainable technological foundation.