In a significant escalation of regulatory pressure, the Ministry of Electronics and Information Technology (MeitY) has issued a stern advisory to major social media platforms, demanding that they immediately and rigorously improve their handling of sexually explicit material or face severe legal repercussions. The directive, delivered late on a Monday, underscores New Delhi’s hardening stance against what it perceives as "below par" content moderation and signals a potential shift from advisories to aggressive enforcement. The advisory explicitly warns that platforms failing to strengthen their measures for identifying, flagging, and expeditiously removing objectionable content could lose their crucial "safe harbour" protections, exposing them to direct legal liability and even criminal charges under the newly enacted Bharatiya Nyaya Sanhita, 2023.

This is not an isolated incident but the third major regulatory intervention on digital content by the Indian government this year. In February, the Ministry of Information & Broadcasting cautioned over-the-top (OTT) streaming services and social media platforms to adhere strictly to rules against sexually explicit content. That was followed in July by a ban on more than 20 streaming platforms, accompanied by a dossier of evidence indicating that these entities had knowingly facilitated the dissemination of explicit material. This consistent pattern of warnings and actions points to a systemic concern within government circles, fueled by widespread public discourse, representations from various stakeholders, and observations from the judiciary regarding the proliferation of content that is obscene or violates norms of decency. The government emphasizes that digital platforms must uphold their due diligence obligations, particularly concerning content deemed obscene, indecent, vulgar, pornographic, paedophilic, or otherwise harmful to children.

The concept of "safe harbour" protection, enshrined in Section 79 of India’s Information Technology Act, 2000, and elaborated in Rules 3 and 4 of the IT Rules, 2021, is central to this regulatory debate. The provision shields intermediary platforms from liability for user-generated content, provided they comply with specific "due diligence" requirements, including prompt action on content takedown requests. The threat of withdrawing this protection is a potent weapon in the government’s arsenal: it would fundamentally alter the operating landscape for platforms such as Meta’s Facebook and Instagram and Google’s YouTube by making them directly accountable for content posted by their vast user bases. The reference to the Bharatiya Nyaya Sanhita, 2023, raises the stakes further, opening the possibility of criminal charges against platform executives or the entities themselves, beyond civil penalties.

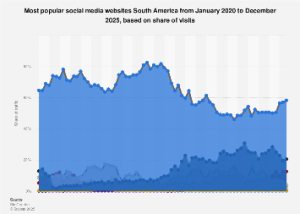

India’s digital market, given its scale and growth, presents both immense opportunity and significant regulatory challenges. With over 400 million monthly active users, India is the largest market globally for platforms such as Facebook, Instagram, and YouTube. This massive user base, while a boon for the digital economy, also generates an enormous volume of user-generated content, making effective moderation a Herculean task. The economic implications of a potential crackdown are substantial. Increased compliance burdens, higher operational costs for content moderation, and the risk of legal battles could deter future investment in India’s digital sector. For global tech giants, navigating India’s increasingly stringent regulatory environment, which weighs economic growth against societal concerns and national security, becomes a strategic imperative. Smaller domestic platforms, which often have fewer resources for sophisticated moderation systems, could face disproportionate challenges, potentially leading to market consolidation or higher barriers to entry for new players.

The technological complexity of content moderation at scale is immense. Social media platforms rely heavily on artificial intelligence and machine learning algorithms to detect and filter prohibited content. While these technologies are constantly evolving, they are far from perfect and often struggle with context, nuance, and the sheer volume of new content uploaded every second. The advisory’s mention of "modified content that is sexually explicit" complicates matters further, as it directly addresses the emerging threat of AI-generated deepfakes and other synthetic media used to create illicit content. Countering that threat requires detection mechanisms capable of identifying manipulated media, a frontier where even leading AI companies are still developing robust solutions. The reliance on human reviewers, who must sift through vast amounts of disturbing content, also raises significant ethical and psychological concerns for platform employees. Meta’s recent transparency report, which noted an increase in the prevalence of adult nudity and sexual activity due to "changes made during the quarter to improve reviewer training and enhance review workflows," inadvertently highlights the difficulties and subjective judgments inherent in categorizing and moderating content at scale.

Transparency reports, often cited by platforms as evidence of their efforts, offer a glimpse into the ongoing battle against illicit content. Google’s community guidelines enforcement report for the September quarter, for instance, showed that 12.1 million YouTube videos were removed globally between July and September, 98% of them automatically by AI. Over 62% of these removals were attributed to child abuse and pornographic content, underscoring the severity of this particular category. Meta’s corresponding report for the same period flagged 40.4 million pieces of content across Facebook and Instagram for child and adult sexual content. While this figure represents a 15% decrease from the previous quarter, the sheer volume illustrates the persistent challenge. A consistent point of contention, however, is the lack of granular, India-specific data in these global reports, which makes it difficult for local regulators to assess the effectiveness of moderation efforts within their jurisdiction. This data asymmetry often fuels government frustration and reinforces the perception that platforms’ moderation efforts are not sufficiently tailored to local regulatory requirements.

Legal and policy experts view the advisory as a strong indication that the government is preparing for a more stringent enforcement phase. Dhruv Garg, a partner at the India Governance and Policy Project, observed that the advisory, while not a new law, serves as a pointed reminder that "safe harbour is contingent on rigorous compliance with due diligence obligations." He emphasized that the timing reflects mounting parliamentary and judicial concerns, prompting the government to clarify that failure to expeditiously identify and remove such material will strip platforms of liability protection and expose them to prosecution. This interpretation aligns with a global trend in which governments increasingly seek to hold digital platforms accountable for the content they host, moving away from a purely hands-off approach. The European Union’s Digital Services Act (DSA) and other international regulations similarly aim to impose greater responsibilities on platforms regarding content moderation, transparency, and user safety, suggesting that India’s actions are part of a broader push for digital accountability.

The ultimate impact of this intensified scrutiny will hinge on both the platforms’ response and the government’s willingness to act on its threats. Should platforms fail to demonstrate substantial improvements, New Delhi could proceed with hefty fines, service restrictions, or even outright bans on non-compliant entities. Such actions would not only disrupt India’s burgeoning digital economy but also send a powerful message worldwide about the limits of platform autonomy in sovereign digital spaces. The ongoing dialogue between the government and Big Tech on issues such as AI content labelling suggests an attempt to find common ground, yet the undercurrent of potential punitive action remains strong. Balancing the constitutional right to freedom of speech with the imperative to protect citizens, particularly children, from harmful content is a delicate act. India’s latest move signals a clear prioritization of the latter, demanding a level of compliance and accountability from global tech giants that could redefine the future of content moderation well beyond India.