The rapid proliferation of generative artificial intelligence tools within software development teams represents a watershed moment, promising unprecedented boosts in developer velocity and project turnaround times. From accelerating code generation to automating routine tasks and identifying potential bugs, AI-powered coding assistants are quickly becoming indispensable, with some industry estimates suggesting adoption rates among developers could reach 80% within the next five years. However, beneath this veneer of efficiency lies a complex paradox: while these tools undoubtedly unlock significant productivity gains, they simultaneously introduce a stealthy accumulation of technical debt, threatening to cripple enterprise systems and inflate long-term operational costs if not managed with strategic foresight. This hidden cost, often overlooked in the initial enthusiasm for speed, demands a nuanced understanding and robust governance framework from global enterprises.

The allure of AI in coding is undeniable, driven by compelling promises of enhanced output and reduced time-to-market. Tools like GitHub Copilot, Amazon CodeWhisperer, and Google Gemini Code Assist are designed to plug directly into integrated development environments (IDEs), offering real-time code suggestions, generating entire functions, and even refactoring existing blocks of code. Early adopters report substantial gains: studies by GitHub, for instance, have indicated that developers using AI assistants complete tasks up to 55% faster, feeding the perception that organizations embracing these technologies gain a significant competitive edge. This immediate gratification fuels a widespread push to embed AI into every facet of the software development lifecycle, from initial design to deployment and maintenance. The global market for AI in software development is projected to grow from billions today to tens of billions within the decade, underscoring the industry’s fervent belief in its transformative power.

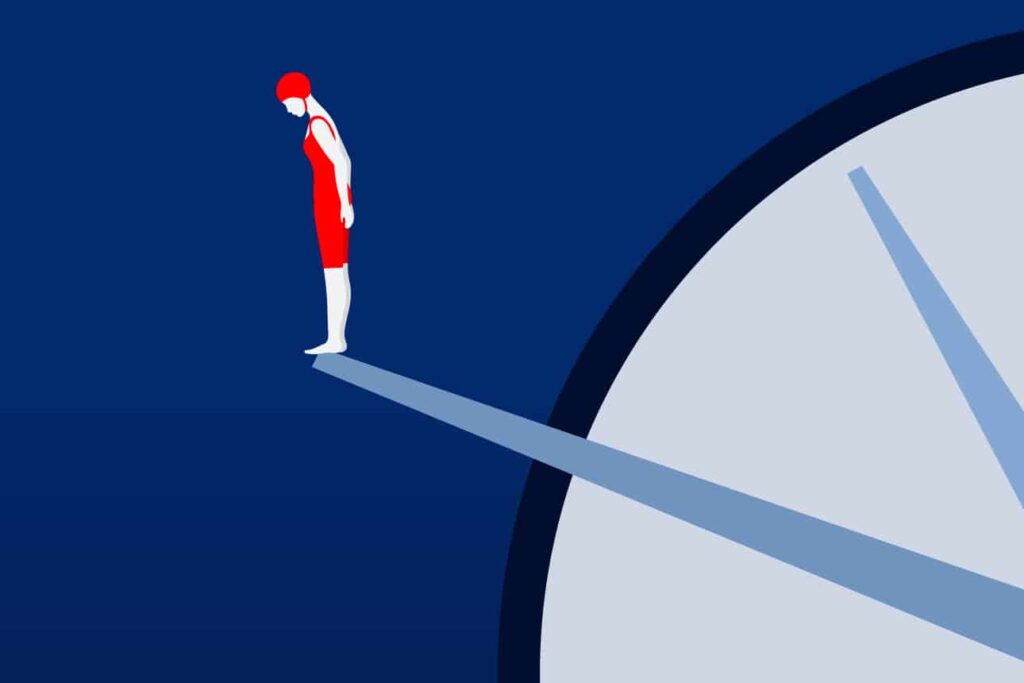

Yet this accelerated pace carries a significant caveat, particularly when AI tools are deployed indiscriminately across existing, often intricate and deeply embedded "brownfield" environments. Technical debt, broadly defined as the implied cost of additional rework caused by choosing an easy (limited) solution now instead of a better approach that would take longer, is a well-understood concept in software engineering. Traditionally, it accrues from rushed deadlines, poor architectural decisions, insufficient documentation, or a lack of developer expertise. Generative AI, while designed to mitigate some of these issues, can paradoxically exacerbate the problem by introducing new, often subtler forms of debt. The very efficiency of AI in generating code can mask underlying quality issues, leading to an accumulation of code that is less readable, harder to maintain, less secure, or poorly optimized for specific system architectures.

The core challenge stems from the nature of AI’s operation. While sophisticated, current generative AI models lack true contextual understanding, architectural foresight, or a deep grasp of specific business logic and legacy system intricacies. They excel at pattern matching and statistical prediction based on vast datasets of existing code. When prompted to generate code, an AI might produce functionally correct output that nonetheless diverges from established coding standards, introduces unnecessary complexity, or fails to integrate cleanly with existing frameworks. In brownfield environments, where codebases can be decades old, poorly documented, and riddled with historical compromises, AI’s lack of holistic understanding can produce code that, while seemingly functional, acts as a foreign body, increasing the entropy of the system and making future modifications considerably more difficult. This "black box" quality also means developers might merge AI-generated code without fully scrutinizing or comprehending it, leaving parts of the codebase that no one on the team can confidently maintain.

Estimates of the global cost of technical debt are staggering, often running into hundreds of billions of dollars annually across industries. AI-accelerated development, without proper oversight, risks inflating these figures dramatically. The immediate savings in developer hours could be dwarfed by future expenditures on refactoring, debugging, security patches, and system redesigns necessitated by the low-quality, AI-generated code. This creates a dangerous cycle: quick wins today lead to slow, expensive failures tomorrow. Companies that prioritize short-term velocity without investing in robust quality assurance and governance frameworks risk trapping themselves in an ever-growing quagmire of unmaintainable software, eroding their competitive agility and innovation capacity.

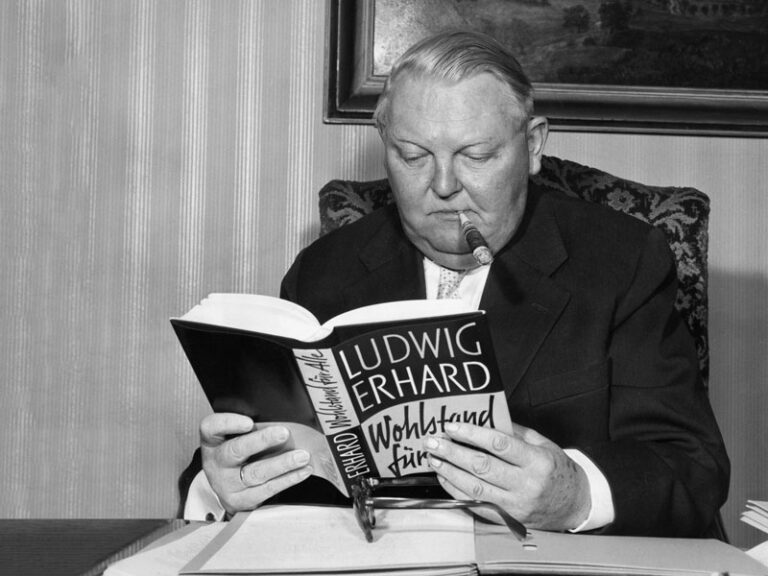

Recognizing this emergent threat, leading experts and forward-thinking organizations are advocating for a strategic, rather than wholesale, adoption of AI coding tools. Dartmouth College professor Geoffrey Parker, known for his work on platform economics and digital transformation, emphasizes the critical need for enterprises to view AI not merely as a productivity enhancer but as a strategic tool requiring careful integration into existing organizational and technical ecosystems. His insights underscore that without a clear understanding of its broader implications for system health and long-term value creation, AI adoption can become a net negative.

Strategic adoption of this kind requires clear directives and robust guardrails. Doug English, co-founder and CTO of Culture Amp, highlights the importance of institutionalizing practices that enable engineers to leverage AI effectively while mitigating its risks. This isn’t about rejecting AI but rather about intelligent deployment. Key mitigation strategies include:

- Enhanced Code Review: Human oversight becomes even more critical. Developers must meticulously review AI-generated code for adherence to architectural patterns and coding standards, and check it for inefficiencies and security vulnerabilities, treating AI as a junior co-pilot whose output requires rigorous validation.

- Robust Automated Testing: An increased emphasis on comprehensive unit, integration, and end-to-end testing frameworks is paramount. Automated tests can quickly identify regressions or unexpected behaviors introduced by AI-generated code, catching issues before they propagate deep into the system.

- Developer Training and Skill Evolution: Engineers need training not just in how to use AI tools but, crucially, in how to critically evaluate their output, understand their limitations, and develop advanced prompt engineering skills that guide AI towards higher-quality code generation. The role of the developer evolves from pure coder to architect, auditor, and strategic orchestrator of AI capabilities.

- Architectural Governance: Senior architects and technical leaders must define explicit boundaries and best practices for AI integration, especially in brownfield environments. This includes identifying specific modules or types of tasks where AI is most beneficial and least risky, and where manual, human-centric development remains essential.

- Leveraging Static Analysis and Code Quality Tools: Integrating advanced static analysis tools, linters, and code quality metrics into the CI/CD pipeline can automatically flag potential issues in AI-generated code, enforcing standards and identifying technical debt proactively.

- Cultural Shift Towards Quality: Organizations must foster a culture that values long-term code quality, maintainability, and security over fleeting gains in development speed. This means allocating time for refactoring, documentation, and continuous improvement, viewing technical debt as a strategic liability rather than an unavoidable byproduct.
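
To make the static-analysis strategy above more concrete, here is a minimal sketch of the kind of automated check a CI pipeline could run on submitted code, whether AI-generated or hand-written. It uses only Python's standard `ast` module to estimate how branch-heavy each function is. The function names, the set of node types counted, and the threshold are all illustrative assumptions, not an established metric or any particular vendor's tooling; a real team would more likely rely on a mature linter or code quality platform.

```python
from __future__ import annotations

import ast

# Node types treated as decision points. This selection is an assumption made
# for illustration; established complexity tools apply richer rules.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp, ast.IfExp)


def approximate_complexity(func: ast.AST) -> int:
    """Return 1 plus the number of branching nodes found inside a function."""
    return 1 + sum(isinstance(node, BRANCH_NODES) for node in ast.walk(func))


def flag_complex_functions(source: str, max_complexity: int = 5) -> list[tuple[str, int]]:
    """List (name, score) for every function whose score exceeds max_complexity.

    Branches inside nested functions also count toward the enclosing function.
    """
    tree = ast.parse(source)
    flagged = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = approximate_complexity(node)
            if score > max_complexity:
                flagged.append((node.name, score))
    return flagged
```

In a pipeline, a small wrapper script could call `flag_complex_functions` on each changed file and fail the build when the returned list is not empty, so that overly tangled code is sent back for human review before it is merged.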

The global economic impact of mismanaged AI in software development could be substantial. Companies that fail to address the technical debt paradox risk spiraling maintenance costs, slower innovation cycles, increased security vulnerabilities, and ultimately, a diminished competitive stance in an increasingly digital economy. Conversely, enterprises that strategically integrate AI, balancing productivity gains with stringent quality controls and robust governance, stand to gain a significant advantage. They will be able to accelerate product development, free human talent for higher-order creative and strategic tasks, and maintain a nimble, resilient technological infrastructure.

In conclusion, the advent of AI coding tools presents an unprecedented opportunity to transform software development. However, realizing this potential requires navigating a delicate balance. The immediate gratification of accelerated development must not overshadow the critical imperative of managing technical debt. By embracing a strategic, disciplined approach, marked by rigorous human oversight, advanced testing, continuous developer education, and clear architectural governance, organizations can harness the power of generative AI to drive innovation without inadvertently building a future shackled by the hidden costs of poor-quality code. The future of software development is undoubtedly AI-augmented, but its success will ultimately hinge on human wisdom, foresight, and an unwavering commitment to engineering excellence.