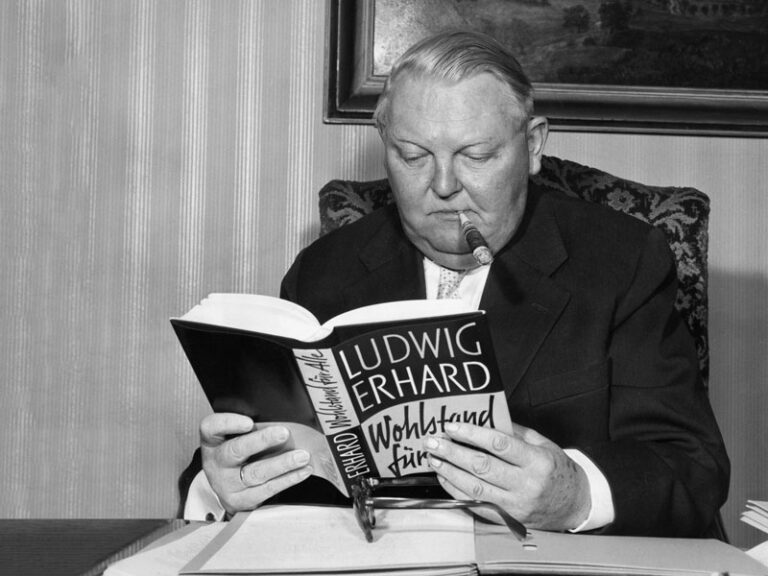

The widespread adoption of generative artificial intelligence in software development promises a step change in productivity: faster development cycles, shorter time-to-market, and a lighter burden on engineering teams. From automating repetitive tasks to suggesting complex code structures, these tools are rapidly becoming a standard part of the modern developer’s toolkit. Beneath the immediate efficiency gains, however, lies a paradox. AI can undeniably boost output, but it can also accelerate the accumulation of technical debt. That cost is often invisible at first, and left unchecked it can silently cripple enterprise systems and stifle future innovation. Because it is so easy to overlook in the initial enthusiasm, integrating AI into the software lifecycle demands a more deliberate, strategic approach.

The surge in AI-powered coding assistants marks a pivotal shift in how software is engineered. Examples include GitHub Copilot, Amazon CodeWhisperer (since folded into Amazon Q Developer), and Google’s Duet AI (since rebranded as Gemini Code Assist). Some market analysts project that the global market for AI in software development will grow at a compound annual rate above 25% over the next five years, reaching tens of billions of dollars. Three forces drive this growth: a global shortage of skilled developers, relentless demand for faster iteration in competitive digital markets, and pressure on businesses to accelerate their digital transformation initiatives. Developers use AI for a wide range of tasks, from generating boilerplate code and unit tests to refactoring existing codebases and helping debug complex issues. The appeal is obvious: faster code generation means more features delivered, quicker bug fixes, and potentially lower development costs.

Yet this speed comes with a significant caveat, particularly when these tools are used in "brownfield" environments: existing, complex, and often legacy software systems. Unlike greenfield development, integrating AI-generated code into an established architecture demands a deep understanding of existing logic, dependencies, and implicit business rules. An assistant working from a prompt and a few open files often lacks that context. This is where technical debt becomes critically relevant. Technical debt is the future work required to address today’s poor-quality or expediently implemented code. It is not a new phenomenon, but AI can accelerate its accumulation, because it makes code far cheaper to produce without making it any cheaper to understand and maintain.

One of the primary ways AI contributes to technical debt is by generating code that is functionally correct but falls short on non-functional requirements such as maintainability, readability, scalability, and security. Generative AI models are trained on vast datasets of existing code and reproduce the patterns most common in that data. They lack a human developer’s understanding of a project’s specific architectural vision, long-term strategic goals, and team coding standards. The result can be verbose, redundant, or inefficient code that works today but becomes a burden for engineers to understand, modify, and debug later. One example is a helper function that duplicates one already present elsewhere in the codebase. Industry studies suggest that up to 30% of a developer’s time is already spent maintaining existing code, a figure that AI-induced technical debt could push higher.

Moreover, the "black box" nature of AI-generated suggestions can lead developers to accept code snippets without fully understanding their logic or potential side effects. This introduces opaque sections into the codebase that are difficult to maintain. It also risks "skill erosion": over-reliance on AI for routine coding tasks could weaken fundamental problem-solving ability, architectural design skills, and the critical thinking needed to identify and mitigate complex software issues. Geoffrey Parker, a Dartmouth College professor known for his work on platform strategy and digital economies, has highlighted this risk in various discussions. Neglecting architectural integrity in favor of rapid feature delivery, he argues, can create systemic vulnerabilities that are far more costly to address later. The long-term health of a software system depends on its architectural coherence. Without careful human oversight, automated tools can inadvertently undermine that coherence.

The consequences of unchecked technical debt are far-reaching and costly. Organizations worldwide reportedly spend billions of dollars annually on addressing technical debt, diverting resources from innovation to remediation. The cost shows up as slower development cycles, more bugs, more security vulnerabilities, difficulty scaling applications, and ultimately a reduced capacity to innovate. A system riddled with poorly integrated AI-generated code, for instance, can become brittle. Each new feature or change in business requirements then becomes progressively harder and riskier to implement. This translates directly into lost market opportunities, diminished competitive advantage, and substantial operational overhead.

To navigate this paradox and realize the genuine benefits of AI in coding, organizations must put robust strategies and guardrails in place. Doug English, co-founder and CTO of Culture Amp, is one example of this forward-thinking approach; his company has proactively established clear directives for AI tool usage. His perspective underscores the need for a multi-faceted framework that puts quality and long-term sustainability ahead of short-term speed.

Key mitigation strategies include:

- Establishing Clear Governance and Policies: Organizations must define explicit guidelines for how AI coding tools are to be used. This includes specifying which tasks are suitable for AI assistance (e.g., boilerplate, unit tests, simple refactoring) and which require full human ownership (e.g., core business logic, security-critical components, complex architectural changes). These policies should be regularly updated and communicated across engineering teams.

- Enhancing Code Review Processes: Far from reducing the need for code reviews, AI-generated code calls for more rigorous scrutiny. Reviewers must check not just functional correctness but also maintainability, adherence to coding standards, architectural fit, and potential security vulnerabilities. Human reviewers might be paired with static analysis tools configured to flag debt-prone patterns common in generated code, such as duplicated blocks or overly complex functions.

- Investing in Developer Training and Upskilling: Engineers need to be trained not just on how to use AI tools, but critically, on how to evaluate their output. This includes understanding the limitations of generative models, developing prompt engineering skills to guide AI effectively, and reinforcing foundational software engineering principles to discern high-quality code from merely functional code. The goal is to cultivate "AI-augmented developers" who leverage tools as assistants, not as substitutes for critical thinking.

- Integrating Automated Quality Checks: Robust CI/CD pipelines must be fortified with advanced static analysis, dynamic analysis, and comprehensive automated testing suites. These tools can act as crucial checkpoints, identifying potential issues in AI-generated code before it enters the main codebase. Linting tools, security scanners, and performance profilers should be standard practice.

- Strategic Application and "Safe Zones": Companies should strategically identify "safe zones" where AI assistance provides maximum benefit with minimal risk. This might include generating documentation, creating simple utility functions, or prototyping new ideas. Conversely, high-risk zones, such as sensitive data handling, complex algorithms, or core intellectual property, should remain primarily human-driven with AI serving as a supplementary, highly supervised aid.

- Monitoring and Metrics: Organizations must establish metrics to track the impact of AI tools on code quality, technical debt accumulation, and overall development efficiency. This includes monitoring bug rates, maintenance costs, security vulnerabilities, and developer satisfaction to ensure that AI adoption is genuinely beneficial and not merely shifting costs to the future.
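The monitoring and automated-check strategies above can be made concrete with a small script. The sketch below is a minimal illustration, not a prescribed method. It assumes each merged change can be labeled as AI-assisted or not, for example via a pull-request label your team defines. It also assumes each change can be linked to defects discovered later and to the static-analysis warnings reported at merge time. The `ChangeRecord` type, its fields, the function names, and the 1.25 threshold are all hypothetical. The script normalizes defects and warnings per 1,000 changed lines for each cohort. It then flags any metric where the AI-assisted cohort exceeds the human-authored baseline by more than the chosen ratio.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class ChangeRecord:
    """One merged change. All field names are hypothetical; map them to
    whatever your version-control and issue-tracking systems record."""
    ai_assisted: bool    # e.g. derived from a team-defined PR label
    lines_changed: int   # lines added plus lines modified
    defects_linked: int  # bugs later traced back to this change
    lint_warnings: int   # static-analysis findings at merge time


def per_kloc(count: int, lines: int) -> float:
    """Normalize a count to 'per 1,000 changed lines' (0.0 if no lines)."""
    return 1000.0 * count / lines if lines else 0.0


def summarize(records: Iterable[ChangeRecord]) -> Dict[str, Dict[str, float]]:
    """Aggregate records into AI-assisted and human-authored cohorts."""
    totals = {
        "ai": {"lines": 0, "defects": 0, "warnings": 0},
        "human": {"lines": 0, "defects": 0, "warnings": 0},
    }
    for r in records:
        t = totals["ai" if r.ai_assisted else "human"]
        t["lines"] += r.lines_changed
        t["defects"] += r.defects_linked
        t["warnings"] += r.lint_warnings
    return {
        cohort: {
            "defects_per_kloc": per_kloc(t["defects"], t["lines"]),
            "warnings_per_kloc": per_kloc(t["warnings"], t["lines"]),
        }
        for cohort, t in totals.items()
    }


def debt_alerts(summary: Dict[str, Dict[str, float]],
                max_ratio: float = 1.25) -> List[str]:
    """Return the metrics where the AI-assisted cohort exceeds the human
    baseline by more than max_ratio (an illustrative threshold only)."""
    alerts = []
    for metric, human_value in summary["human"].items():
        ai_value = summary["ai"][metric]
        if human_value == 0:
            # No baseline to compare against: flag any nonzero AI value.
            if ai_value > 0:
                alerts.append(metric)
        elif ai_value / human_value > max_ratio:
            alerts.append(metric)
    return alerts


if __name__ == "__main__":
    history = [
        ChangeRecord(ai_assisted=True, lines_changed=2000,
                     defects_linked=6, lint_warnings=40),
        ChangeRecord(ai_assisted=False, lines_changed=4000,
                     defects_linked=8, lint_warnings=60),
    ]
    print(debt_alerts(summarize(history)))  # ['defects_per_kloc', 'warnings_per_kloc']
```

In the example, the AI-assisted cohort shows 3.0 defects per 1,000 lines, while the human baseline shows 2.0. That is a ratio of 1.5, so the defect metric is flagged. Normalizing by lines changed keeps a larger cohort from looking worse simply because it is larger. In practice, a team would also want to compare like-for-like work, because boilerplate and core business logic carry very different defect rates.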

The global landscape underscores the urgency of this balanced approach. In heavily regulated industries like finance and healthcare, the risks of AI-induced technical debt extend beyond operational inefficiency to regulatory non-compliance and severe reputational damage. In highly competitive tech sectors, the ability to innovate rapidly without accruing crippling debt will be a decisive factor in market leadership. Companies that proactively manage the technical debt associated with AI coding tools will gain a significant competitive edge and retain the capacity for sustained innovation and agility. Conversely, those that fall into the "productivity trap" risk becoming bogged down by legacy code, unable to adapt to new demands or fend off more nimble competitors.

Ultimately, AI coding tools represent a powerful evolutionary step in software development, offering real opportunities for efficiency and acceleration. That power, however, must be wielded with caution and foresight. The future of software engineering lies not in blindly accepting every AI-generated suggestion. It lies in a disciplined, strategic integration that prioritizes long-term architectural health and code quality. By establishing clear guardrails, fostering critical human oversight, and investing in robust processes, enterprises can use AI to build resilient, innovative systems. In doing so, they avoid the hidden costs that could otherwise derail their digital ambitions. The paradox is resolved not by rejecting AI but through judicious governance, which turns potential pitfalls into pathways for sustainable growth.