The pursuit of artificial general intelligence (AGI), meaning machines that can understand, learn, and apply knowledge across a wide range of tasks with human-like flexibility, is evolving rapidly and has split into two fundamentally different research trajectories. One path builds on the rapid growth of large language models (LLMs), aiming to achieve generality through sheer scale and increasingly sophisticated architectures. The other, more biologically inspired, seeks to reconstruct the human brain in digital form through whole brain emulation (WBE). The two paths differ not only in method but in the investment they attract, and both carry profound implications for the future of technology and of humanity itself.

The genesis of the LLM revolution can be traced back to a seminal 2017 research paper from Google, "Attention Is All You Need." This work introduced the "transformer" architecture, a design built around attention rather than recurrence that made training on large bodies of text far more parallelizable and efficient. This innovation laid the groundwork for sophisticated LLMs, such as those behind OpenAI’s ChatGPT, which can handle reasoning, translation, and coding and hold remarkably fluid conversations. The underlying principle, first hinted at in Google’s 2016 paper "Exploring the Limits of Language Modeling," is that scaling up neural networks (in data volume, parameter count, and computational power) yields predictable and continuous improvements in performance. This combination of architectural innovation and massive scaling has propelled generative AI to the forefront of technological advancement and underpins much of today’s AI research. The open question is whether this emergent intelligence, derived from patterns in vast datasets, can achieve the broad cognitive capabilities that AGI requires.
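The "predictable and continuous improvement" described above is often modeled as a power law, in which a model's loss falls as a fixed power of its size. The sketch below assumes the form L(N) = (N_c / N)^α, where N is the parameter count; the constants `N_C` and `ALPHA` are hypothetical placeholders chosen for illustration, not fitted values from any published model. Published scaling studies fit similar curves to data and compute as well as to parameters.

```python
"""Illustrative power-law scaling curve, assuming L(N) = (N_C / N) ** ALPHA.

N_C and ALPHA are hypothetical placeholders, not measured values.
"""

N_C = 1e14     # hypothetical scale constant, in parameters
ALPHA = 0.08   # hypothetical scaling exponent


def loss(n_params: float) -> float:
    """Modeled loss for a network with n_params parameters."""
    return (N_C / n_params) ** ALPHA


def loss_ratio_per_doubling() -> float:
    """Under a pure power law, doubling N multiplies loss by 2 ** -ALPHA,
    regardless of the starting size."""
    return 2.0 ** -ALPHA


if __name__ == "__main__":
    # Loss shrinks steadily as the parameter count grows by factors of ten.
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"{n:.0e} params -> modeled loss {loss(n):.3f}")
    print(f"each doubling multiplies loss by {loss_ratio_per_doubling():.4f}")
```

Because the per-doubling ratio does not depend on the starting size, a curve fitted on small models can be extrapolated to larger ones. That is the practical appeal of scaling laws, and also their main risk if the trend breaks.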

While LLMs show impressive proficiency in many domains, their intelligence remains largely disembodied. They lack direct interaction with the physical world, persistent memory analogous to human recollection, and the capacity for self-directed goal formation. Critics argue that true intelligence is rooted in embodied experience: the continuous process of perceiving, interacting with, and learning from an environment. If AGI is to emerge from this paradigm, it may require more than linguistic prowess, likely including integration with perceptual systems, embodiment, and continuous, adaptive learning mechanisms. The current trajectory of LLMs therefore represents an abstract approach to intelligence, building sophisticated models of cognition from textual and behavioral data.

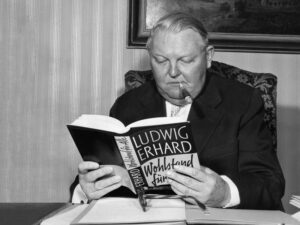

In stark contrast, the whole brain emulation (WBE) approach aims for a direct, bottom-up reconstruction of intelligence. Popularized by researchers such as Anders Sandberg and Nick Bostrom in their 2008 paper "Whole Brain Emulation: A Roadmap," WBE envisions an exact, one-to-one computational replica of the human brain. This ambitious endeavor, described as the "logical endpoint of computational neuroscience’s attempts to accurately model neurons and brain systems," would involve three steps: scanning a biological brain at nanometer resolution, translating its intricate structure into a functional neural simulation, and running that simulation on immensely powerful computing hardware. The ultimate promise of WBE is not just artificial intelligence but potentially the continuation of an individual consciousness, preserving memories, preferences, and personal identity.

The European Union’s Human Brain Project (HBP), which ran from 2013 to 2023, was a large-scale initiative to advance understanding of the brain through computational neuroscience. Although AI was not an initial core component, the project’s trajectory was shaped in part by rapid advances in deep learning, particularly after the 2012 debut of AlexNet, an image-recognition neural network often described as AI’s "Big Bang" moment. AlexNet’s success, driven by massive image datasets and the parallel processing power of GPUs, revolutionized deep learning. As the HBP’s final assessment report noted, researchers found that while deep learning techniques offered systematic development paths, they often diverged from biological processes. The later phases of the HBP focused on bridging this gap by mirroring biological mechanisms more closely.

This emphasis on biological fidelity aligns with the "patternist" philosophy, which holds that consciousness and identity are "substrate independent": patterns that could, in principle, be emulated by computational systems. If the underlying electrophysiological models prove sufficient, as Sandberg and Bostrom suggest, full human brain emulations could become a reality before the mid-21st century. This makes WBE one of the few truly bottom-up approaches to AGI, aiming to build not a model of a mind but a mind itself.

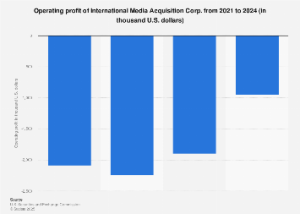

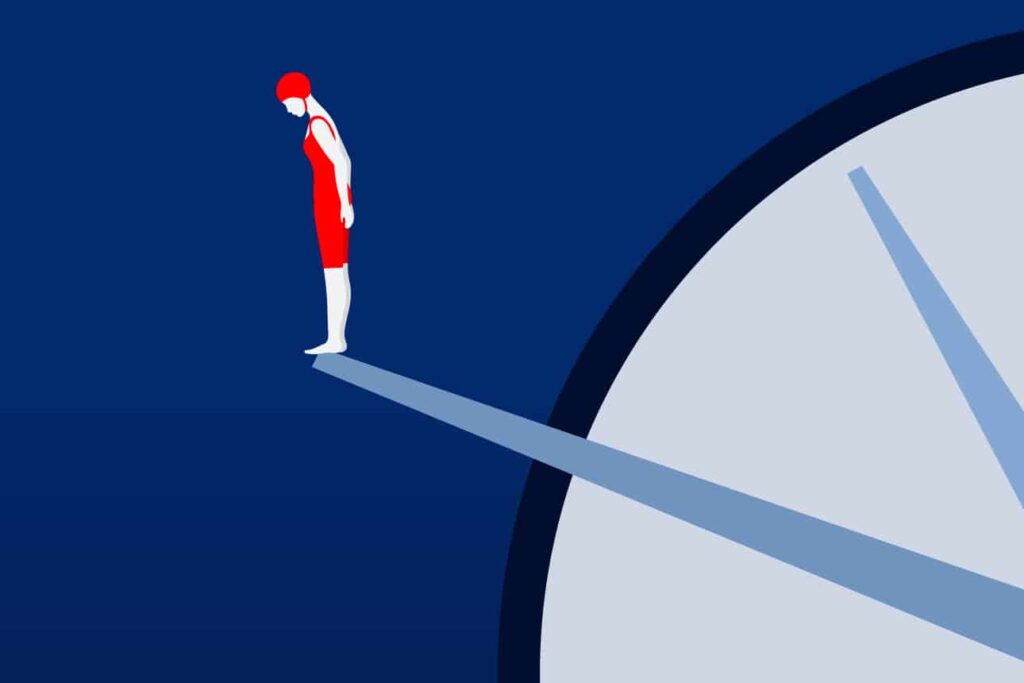

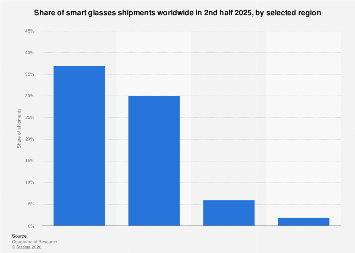

The gap in progress between LLMs and WBE is stark: LLMs have achieved considerable public visibility and commercial application, while WBE remains largely confined to theoretical research and science fiction. Part of this difference reflects vastly different investment landscapes, and the cost of developing advanced AI is staggering. Google has committed $15 billion to AI infrastructure in India over the next five years, while Meta has reportedly invested $14.3 billion in Scale AI, a deal that signals a direct pivot toward AGI development. These figures dwarf the €1 billion awarded to the Human Brain Project for its decade-long mission. The research organization Epoch AI estimates that the cost of training large-scale machine learning models is growing by about 2.4 times per year, and PitchBook data indicates that generative AI investment surged 92% year on year in 2024, reaching $56 billion.
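The growth figures quoted above compound quickly, and a few lines of arithmetic make their scale concrete. The sketch below assumes the 2.4x annual rate holds constant, which is an extrapolation rather than a forecast; the function names are illustrative. Sustained over five years, 2.4x per year compounds to roughly 80x, and a 92% increase that reaches $56 billion implies about $29 billion the year before.

```python
"""Back-of-envelope arithmetic on the investment growth figures.

Assumes a constant 2.4x annual growth rate (an extrapolation, not a forecast).
"""

ANNUAL_GROWTH = 2.4          # training-cost multiplier per year (Epoch AI estimate)
GENAI_2024_BILLIONS = 56.0   # 2024 generative AI investment, USD billions (PitchBook)
YOY_INCREASE = 0.92          # 92% year-on-year increase


def compound_multiplier(rate: float, years: int) -> float:
    """Total growth factor after `years` of constant multiplicative growth."""
    return rate ** years


def implied_prior_year(current: float, increase: float) -> float:
    """Invert a fractional year-on-year increase to recover the prior-year value."""
    return current / (1.0 + increase)


if __name__ == "__main__":
    for years in (1, 3, 5):
        factor = compound_multiplier(ANNUAL_GROWTH, years)
        print(f"{years} yr at {ANNUAL_GROWTH}x/yr -> {factor:.1f}x")
    prior = implied_prior_year(GENAI_2024_BILLIONS, YOY_INCREASE)
    print(f"implied 2023 generative AI investment: ${prior:.1f}B")
```

The compounding helper treats growth as a constant multiplier per year, which is the simplest reading of "2.4 times per year"; real spending curves fluctuate around any such trend.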

For investors, AGI research carries an unusually high risk profile. The potential return on investment hinges not only on incremental gains in model efficiency but also on fundamental breakthroughs in new paradigms, including advanced memory architectures, neuromorphic computing chips, and multimodal learning systems capable of integrating context and continuity.

Ultimately, LLMs and WBE represent two distinct but potentially complementary pathways toward general intelligence. LLMs work from the top down, abstracting cognitive functions from language and behavioral patterns and discovering intelligence through scale and statistical structure. WBE works from the bottom up, striving to replicate the intricate biological mechanisms thought to give rise to consciousness. One views intelligence as an emergent property of computation, while the other sees it as a physical process to be faithfully copied.

It is conceivable that these two hemispheres of AI research may eventually converge. Advances in neuroscience could inform the development of more biologically plausible machine learning architectures, while synthetic models of reasoning might inspire new methods for decoding the complexities of the living brain. The quest for AGI could, therefore, culminate in a synthesis of engineered and embodied intelligence.

The pursuit of AGI is, at its core, an act of profound introspection. If the patternist view holds true, and the mind is substrate-independent and reproducible as a pattern rather than a purely biological phenomenon, then successfully replicating it in silicon would fundamentally alter our understanding of the "self." The implications of such a feat would be immense and warrant careful consideration.

Nvidia CEO Jensen Huang’s assertion that "artificial intelligence will be the most transformative technology of the 21st century" is a bold statement, made by the head of the company that supplies much of the computing hardware the field depends on. Yet, as the late Stephen Hawking cautioned, "Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks." Such warnings point to the need for a balanced approach, one that pairs ambitious innovation with a clear understanding of the ethical and existential challenges involved.